Artificial Intelligence for Dummies

Last Nekst, Ennia gave us all an introduction to Machine Learning. This time, we are going to look at a closely related field (or buzzword?): Artificial Intelligence, or AI. Every day you see tons of news items about AI, ranging from self-driving cars to negotiating robots. But how does it relate to other fields like Machine Learning or Deep Learning? What are the dangers? This and more in AI for Dummies!

Text by: Thomas van Manen

To get a better understanding of what the hype around AI entails, a good starting point is a formal definition. Wikipedia describes it as follows: “Artificial Intelligence is intelligent behavior by machines, rather than natural or organic intelligence of humans or other animals. (…) Any device or algorithm that perceives its environment and takes action that maximizes its chance of success at some goal.” We saw last Nekst that Machine Learning is a field in which machines learn specific tasks or patterns from data. You could therefore say that Machine Learning is the process of learning intelligent behavior (i.e. Artificial Intelligence) from data.
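To make the “perceives its environment and takes action” part of that definition concrete, here is a minimal Python sketch of an agent. It is a toy of our own, not taken from Wikipedia or from last Nekst: the world is a number line, the agent perceives its own position and a goal, and it picks whichever move (-1, 0 or +1) scores best. Measuring “chance of success” as closeness to the goal, and all function names, are assumptions made for illustration.

```python
def perceive(world):
    """The agent's 'sensor': read its own position and the goal's position."""
    return world["agent"], world["goal"]


def choose_action(position, goal, actions=(-1, 0, 1)):
    """Pick the move whose outcome scores best.

    Assumption for this toy: 'success' means being close to the goal,
    so a move scores minus the distance that remains after making it.
    """
    return max(actions, key=lambda step: -abs(goal - (position + step)))


def run(world, max_steps=20):
    """Perceive-act loop: sense, decide, act, until the goal is reached."""
    for _ in range(max_steps):
        position, goal = perceive(world)
        if position == goal:
            break
        world["agent"] += choose_action(position, goal)
    return world["agent"]


print(run({"agent": 0, "goal": 5}))  # prints 5
```

Here the decision rule `choose_action` is written by hand. In Machine Learning terms, the interesting part is learning a rule like that from data instead.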

Checkers and threats

The birth of AI is generally dated to 1956, when a professor at Dartmouth College organized a conference to clarify and develop ideas about thinking machines. He dubbed this field Artificial Intelligence. Building on ideas put forward during this conference, researchers created numerous brilliant programs. Computers were able to learn checkers strategies, prove theorems, and even speak English. It went so well that by the mid-sixties, the founders of AI predicted that within twenty years, a computer would be able to do any work a man can do.

However, this prediction turned out to be far too optimistic: in the seventies, people learned that some tasks are much more difficult for AI than others. The toy problems AI had dealt with so far, such as checkers, were nothing compared to the grand problems of the real world. Critics were very eager to point this out. This slowed down progress, and in 1974, in response to this criticism and to political pressure, most of the funding for AI in the UK and the USA was cut off. This period came to be known as the AI winter, a time in which getting funding for AI was nearly impossible.

In the years that followed, AI experienced more setbacks, but also successes. A famous example is IBM's Deep Blue, which in 1997 became the first computer to defeat a reigning world chess champion, Garry Kasparov, in a match. In recent years, AI has received enormous boosts thanks to access to huge amounts of data, faster computers and more advanced statistical techniques. This has led to many cool applications of AI, some of which you can read about at the end of this article.

However, the development of AI has also attracted critics who fear what AI could become. Such critics have been around since the very beginning of AI, and their fears have found their way into popular culture. A famous example is the film 2001: A Space Odyssey, in which the intelligent computer HAL 9000 decides to kill the crew members who try to shut him down, because they would get in the way of his programmed objectives.

These fears are not a thing of the past. Today, too, critics point out the dangers of AI, the most famous one being the entrepreneur Elon Musk. He is a strong advocate of regulating AI as soon as possible, and has compared work on AI to “summoning the demon”. He fears that we will only realize the importance of regulation once robots are walking down the street killing everyone, at which point it would be far too late.

From science-fiction to reality

The kind of AI Elon Musk fears is only one of two kinds of AI. It is called general AI or strong AI, and it refers to AI capable of general intelligent action, rather than AI that is programmed for one specific task. Some definitions even require that a strong AI be conscious. This is the kind of AI that the founders of AI envisioned, but it has turned out to be very difficult to achieve.

There are various criteria to determine whether a certain AI application qualifies as strong AI. A famous criterion, which Ennia explained last Nekst, is the Turing test. But there are many other, sometimes funny, tests. One example is that a machine with strong AI should be able to enter an average home and make a cup of coffee using whatever tools are available there. Another is that a machine with strong AI should be able to enroll in a university program and obtain the same degree a human would.

To this day, this kind of AI has only appeared in science-fiction films like The Matrix and I, Robot. The other kind of AI, weak AI (also called narrow AI), is definitely a present-day reality. Weak AI is AI that is focused on one narrow task. Well-known examples are personal assistants like Siri or Google Assistant. While these assistants seem to operate with some intelligence, they are limited to a fixed set of tasks an assistant would do, such as checking the weather or adding an appointment to your calendar. Siri would not be able to make you a cup of coffee or obtain a degree.

Deep Learning, the magic behind it all?

Many of the cutting-edge AI examples we will see rely on Machine Learning, and mostly on one specific part of it: Deep Learning. Deep Learning is one of those terms that have received tons of hype over the past years. To get to Deep Learning, we first need to take a step back. Since the early days of Machine Learning, a type of algorithm called the Artificial Neural Network (ANN) has existed. ANNs are inspired by our understanding of the brain: an immense number of neurons that are all interconnected.

You might, for instance, take an image, cut it into tiles, and feed each of these tiles to the first layer of the ANN, the input layer. These neurons then pass the data on to the second layer of neurons. This layer performs its operations, and so forth, until the final layer, the output layer, is reached. Each neuron assigns a weight to each of its inputs, and by combining all those weighted inputs the network produces a final output.
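The layer-by-layer flow described above can be sketched in a few lines of plain Python. This is a minimal illustration under our own assumptions, not how any particular library does it: the image is an 8x8 grid of random brightness values, each 2x2 tile is summarised by its average brightness, the network has one hidden layer of 8 neurons and one output neuron, and the weights are random. Because the network is untrained, its output does not mean anything yet.

```python
import math
import random


def cut_into_tiles(image, tile):
    """Cut a square grayscale image (a list of rows of brightness values)
    into tile x tile blocks and summarise each block by its average brightness.
    Assumes the image size is a multiple of `tile`."""
    size = len(image)
    features = []
    for top in range(0, size, tile):
        for left in range(0, size, tile):
            block = [image[r][c]
                     for r in range(top, top + tile)
                     for c in range(left, left + tile)]
            features.append(sum(block) / len(block))
    return features


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def make_layer(n_inputs, n_neurons, rng):
    """A fully connected layer: one weight per (neuron, input) pair plus a bias."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases


def forward(layers, inputs):
    """Pass the data from layer to layer. Every neuron multiplies each input
    by its weight, adds the results and a bias, and applies the sigmoid."""
    activations = inputs
    for weights, biases in layers:
        activations = [sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
                       for row, b in zip(weights, biases)]
    return activations


rng = random.Random(42)
image = [[rng.random() for _ in range(8)] for _ in range(8)]  # toy 8x8 "picture"
inputs = cut_into_tiles(image, 2)                              # 16 tiles -> 16 inputs
network = [make_layer(16, 8, rng), make_layer(8, 1, rng)]      # hidden + output layer
output = forward(network, inputs)
print(output)  # one number between 0 and 1, e.g. a "cat score"
```

Summarising each tile by one number is a simplification; real image networks usually feed every pixel in and let the first layers work out which patterns matter.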

However, until recently, using ANNs for anything substantial seemed downright impossible. Even a modest ANN needs a lot of computing power, and this was simply not available. ANNs also need to be fed a lot of data. If you wanted to recognize, for instance, a cat in a picture, you would preferably need hundreds of thousands of pictures of cats to get the weights of the neurons right, so that the network classifies pictures correctly.

The next step is to take these ANNs and make them huge, by increasing the number of layers and neurons the data is fed through. This requires tons of data as well as a lot of computing power. This is what is called Deep Learning, and it lies at the foundation of most of the cutting-edge AI that is currently being developed.
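A quick back-of-the-envelope calculation shows why making ANNs huge is so costly. In a fully connected network, every neuron has one weight per neuron in the previous layer plus a bias, so the number of values to learn grows quickly as layers get deeper and wider. The layer sizes below are hypothetical, chosen only for comparison.

```python
def count_parameters(layer_sizes):
    """Weights plus biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    Between two layers with n_in and n_out neurons there are n_in * n_out
    weights, and each of the n_out neurons has one bias.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


shallow = [64, 16, 1]          # 64 inputs, one small hidden layer, 1 output
deep = [64, 256, 256, 256, 1]  # same inputs, three wide hidden layers

print(count_parameters(shallow))  # 1057
print(count_parameters(deep))     # 148481
```

Every one of those values has to be tuned during training, which is why the deep version needs far more data and computing power than the shallow one.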

To conclude: AI has made tremendous strides in the last few years. With the possibilities of modern computing power and the resulting rise of Deep Learning, AI may finally be able to reach the state envisioned by science-fiction movies. While there is of course the doom scenario of robots taking over the world, I am mostly excited to have a C-3PO at my doorstep soon!

Cool examples:

DeepMind AlphaGo Zero

While computers beat the best human players at games like checkers and chess a long time ago, for the ancient Chinese game of Go this remained out of reach until recently. Because the number of possible moves at each point in the game is much higher in Go than in checkers or chess, Go is a lot harder for a computer. In fact, Go has more possible board positions than there are atoms in the observable universe. What makes DeepMind's AlphaGo Zero so special is that it learns the game in a completely different way. Previous versions of AlphaGo developed their strategies by training on millions of moves from games previously played by strong human players. AlphaGo Zero does not use any data from real games. Instead, DeepMind simply gave the algorithm the rules of Go, and the algorithm then figured out strong strategies by playing against itself. This technique is called Reinforcement Learning. If you want to learn more, go to: https://deepmind.com/blog/alphago-zero-learning-scratch/

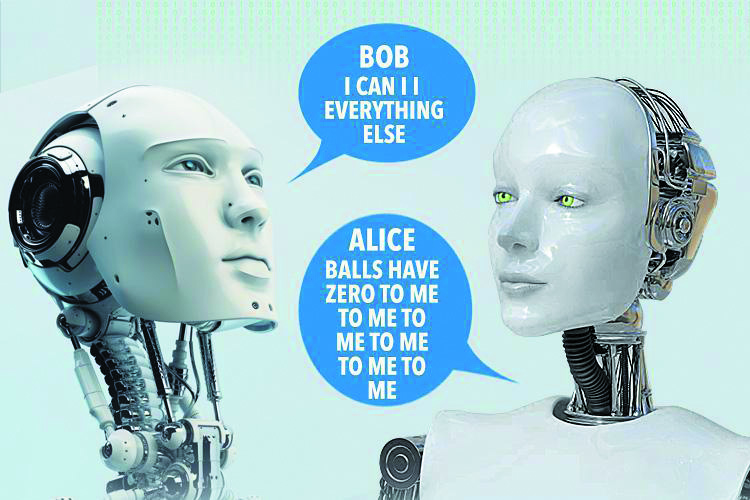
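AlphaGo Zero itself combines a deep neural network with a search technique (Monte Carlo tree search), far too much to show here. The core idea of learning purely from self-play can still be sketched on a much smaller game. The sketch below swaps Go for a simple version of Nim (players take turns removing 1, 2 or 3 stones from a pile; whoever takes the last stone wins) and swaps the neural network for a plain table of move values, updated with a simple Monte Carlo rule. The game, the value table and every parameter (pile size, number of games, exploration rate `epsilon`, learning rate `alpha`) are our own assumptions. As with AlphaGo Zero, the program is given only the rules and learns by playing against itself.

```python
import random
from collections import defaultdict

MAX_TAKE = 3  # rule of this Nim variant: take 1, 2 or 3 stones per turn


def legal_moves(pile):
    return [n for n in range(1, MAX_TAKE + 1) if n <= pile]


def choose_move(values, pile, epsilon, rng):
    """Epsilon-greedy: usually play the best-known move, sometimes explore."""
    moves = legal_moves(pile)
    if rng.random() < epsilon:
        return rng.choice(moves)
    return max(moves, key=lambda m: values[(pile, m)])


def self_play(start_pile=10, games=30000, epsilon=0.2, alpha=0.1, seed=0):
    """One shared value table plays both sides. After each game, every move the
    winner made is nudged toward +1 and every move the loser made toward -1."""
    rng = random.Random(seed)
    values = defaultdict(float)  # (pile, move) -> estimated outcome for the mover
    for _ in range(games):
        pile, player, history = start_pile, 0, []
        while pile > 0:
            move = choose_move(values, pile, epsilon, rng)
            history.append((player, pile, move))
            pile -= move
            player = 1 - player
        winner = history[-1][0]  # whoever took the last stone wins
        for mover, state, move in history:
            target = 1.0 if mover == winner else -1.0
            values[(state, move)] += alpha * (target - values[(state, move)])
    return values


def greedy_move(values, pile):
    """The move the trained table considers best, without exploration."""
    return max(legal_moves(pile), key=lambda m: values[(pile, m)])


values = self_play()
print({pile: greedy_move(values, pile) for pile in range(1, 11)})
```

For this version of Nim the perfect strategy is known: always leave your opponent a multiple of 4 stones. After enough self-play games, the greedy move from a pile of n stones (where n is not a multiple of 4) should be to take n % 4 stones, even though that strategy was never programmed in.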

Negotiating chatbots

Recently, Facebook designed two chatbots, Alice and Bob, to negotiate with each other. A couple of mind-boggling results came from this. The first remarkable thing is that the bots turned out to be quite skilled at deal-making. For instance, they were able to feign interest in an item that had no value to them, only to give it up later as a “compromise”. Basically, they taught themselves to lie. However, the most bizarre result was that the bots started having very weird conversations, such as:

Bob: “I can can I I everything Else.”

Alice: “Balls have zero to me to me to me to me to me to me”

To make the negotiation process smoother, they had drifted into a brand-new language of their own! This happened without any input from the researchers. Since the goal of this research was to create bots that could communicate with actual humans, the project was unfortunately shut down afterwards.

Walking AI

Another example comes from our friends at DeepMind. One form of intelligence is physical intelligence: think of a football player dodging a slide tackle, or a monkey swinging through trees. It basically consists of being able to move through a complex and changing environment. DeepMind developed AI that is able to “walk” given only the simplest of directives. They simulated agents with various bodies, for instance a blob with two legs, and gave them only one objective: move forward without falling. From this very simple instruction, the agents learned complex skills such as jumping and climbing in order to move forward. For a short film showing the walking agents, go to: https://www.youtube.com/watch?v=gn4nRCC9TwQ
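In Reinforcement Learning, an objective like “move forward without falling” is expressed as a reward. Below is a minimal sketch of how such an objective could be scored, assuming a hypothetical simulation that reports the agent's forward position and whether it has fallen at every time step. The penalty value, the data format and the function name are our own choices, not DeepMind's actual reward.

```python
FALL_PENALTY = -10.0  # hypothetical cost of falling over


def episode_return(trajectory):
    """Score one simulated episode.

    trajectory: list of (forward_position, has_fallen) snapshots, one per time
    step (a hypothetical format). Each step earns the distance moved forward;
    falling costs FALL_PENALTY and ends the episode, so later snapshots are ignored.
    """
    total = 0.0
    for (x_before, _), (x_after, fallen) in zip(trajectory, trajectory[1:]):
        total += x_after - x_before
        if fallen:
            total += FALL_PENALTY
            break
    return total


steady = [(0.0, False), (0.5, False), (1.5, False)]
stumble = [(0.0, False), (2.0, True), (5.0, False)]
print(episode_return(steady))   # 1.5
print(episode_return(stumble))  # -8.0
```

A learning algorithm would then adjust the agent's movements to make this score as high as possible. Nobody tells the agent to jump or climb; such skills can emerge simply because they earn more reward.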

Automated Machine Learning

Over the past few years, many companies have implemented Machine Learning in their day-to-day business to optimize all sorts of processes, such as customer targeting, pricing, or product recommendations. However, choosing the right algorithm for such a task can be very hard for a Data Scientist, and even with plenty of experience it can take a lot of time. Since Data Scientists are scarce, Automated Machine Learning tackles this problem by automatically preprocessing the data, selecting relevant features, choosing the best model, and then selecting the best hyperparameters for that model. With Automated Machine Learning, smaller companies that cannot hire an entire data science department are still able to obtain relevant insights, by using AI to select the right data science “pipeline”.
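The search that Automated Machine Learning automates boils down to a loop: preprocess the data, try each candidate model with each candidate hyperparameter, score it on data held back from training, and keep the best. The following is a deliberately tiny, hypothetical version in plain Python, not the API of any AutoML product. The only preprocessing step is min-max scaling, the candidates are a majority-class baseline and k-nearest-neighbours with a few values of k, and feature selection is left out. The toy data set has two features on very different scales, which is exactly where the scaling step matters.

```python
import random
from collections import Counter


def fit_scaler(rows):
    """Preprocessing, learned on training rows only: per-feature min and max."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]


def apply_scaler(scaler, rows):
    """Rescale every feature to roughly [0, 1] using the training min and max."""
    lows, highs = scaler
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0
             for v, lo, hi in zip(row, lows, highs)]
            for row in rows]


def majority_model(X, y):
    """Baseline: always predict the most common training label."""
    label = Counter(y).most_common(1)[0][0]
    return lambda row: label


def knn_model(X, y, k):
    """k-nearest neighbours: majority vote among the k closest training rows."""
    def predict(row):
        by_distance = sorted(range(len(X)),
                             key=lambda i: sum((a - b) ** 2 for a, b in zip(X[i], row)))
        return Counter(y[i] for i in by_distance[:k]).most_common(1)[0][0]
    return predict


# (name, model builder, hyperparameters) for every candidate pipeline
CANDIDATES = [("majority", majority_model, {})] + [
    ("knn", knn_model, {"k": k}) for k in (1, 3, 5, 7)
]


def auto_ml(X, y, validation_fraction=0.25, seed=0):
    """Try every candidate; return (name, hyperparameters, validation accuracy)
    of the best one."""
    order = list(range(len(X)))
    random.Random(seed).shuffle(order)
    cut = int(len(X) * (1 - validation_fraction))
    train, valid = order[:cut], order[cut:]

    scaler = fit_scaler([X[i] for i in train])
    X_train = apply_scaler(scaler, [X[i] for i in train])
    X_valid = apply_scaler(scaler, [X[i] for i in valid])
    y_train = [y[i] for i in train]
    y_valid = [y[i] for i in valid]

    best = None
    for name, build, params in CANDIDATES:
        model = build(X_train, y_train, **params)
        correct = sum(model(row) == label for row, label in zip(X_valid, y_valid))
        accuracy = correct / len(y_valid)
        if best is None or accuracy > best[2]:
            best = (name, params, accuracy)
    return best


# Toy data: the second feature is on a scale 1000 times larger than the first.
rng = random.Random(1)
X = [[rng.random(), rng.random() * 1000] for _ in range(200)]
y = [1 if a * 1000 > b else 0 for a, b in X]
name, params, accuracy = auto_ml(X, y)
print(name, params, round(accuracy, 2))
```

Real AutoML systems search far larger spaces (many preprocessing steps, model families and hyperparameters) and use smarter strategies than trying everything, but the principle of scoring candidate pipelines on held-out data is the same.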