Estimation of the Refinery Planning Optimization

The refining industry has to deal with assessing the value they gain, and are thus willing to pay maximally, from each of the crudes they purchase; a complicated process due to the many different production processes within a refinery. Assessing which refinery planning is optimal on the basis of such aspects therefore takes a long time. This report discusses the way in which we, together with ORTEC, will attempt to estimate the refinery planning optimization and thereby reduce the computation time required, such that the refinery has more up-to-date information. Some difficulties inherent to the methodology applied in the refining business will be outlined, together with the proposed solution.

Basics of the refining business

Refineries, the large metal factories we all most likely know due to their appearance rather than their functionality, rework crude oils (also simply referred to as crudes) into products such as kerosine, gasoline, but also lesser known products such as needle coke, which finds its application in the production of electrodes. While the way these are made is a different subject altogether due to the fact that it is a combination of many difficult chemical processes, it is important to realize that the crudes a refinery uses are more than just simple black substances that are drilled up from the ground; each crude has its own set of characteristic values. These concern aspects such as the percentage amount of kerosine or residue they contain, but also their sulphur content, octane content, and many other characteristics. All of these characteristics are well documented as many are important to some degree in either the process of refining or the maintaining of regulations that are set upon all manner of aspects within the refining business. Since not all crudes are the same in these aspects the quantities of each crude can have significant impact on the refinery planning that is created by the optimization process. Our main driver of the estimation will come from these aspects, assessing scenarios where certain crudes are available in smaller or larger quantity than originally expected.

Not only do crudes differ among each other, also refineries are heterogeneous: they can not only contain different distilling units, but are also able to drastically change their setup from one month to the next. For example, they can first focus mainly on fuel oils, and afterwards shift their focus to the production of butanes. In this way, they are in some sense able to respond to the developments of the market, and produce the products with highest demand.

This heterogeneity in the aspects of the refining business can cause the price a certain refinery is willing to pay for a crude to differ from the market price. For example, if a refinery efficiently produces mainly naphtha it might be willing to pay more for crudes that are particularly suitable for this purpose, while a refinery that produces butanes has considerably less use for such crudes and thus has a willingness to pay for those crudes that is lower than the market price. Similarly, crude oils are in some regard substitutes to each other, and thus if one is relatively cheap compared to its substitute, a refinery might be inclined to buy the former. This can have significant effects on what the outcome of the optimal refinery plan will be, and thus needs to be taken into account when we estimate it.

The model

The process of creating the refinery plan can be very time consuming, as it is the result of a very large optimization problem, and usually needs to be done seperately for every scenario the refinery is interested in. ORTEC therefore wants to create a method to accurately estimate the planning outcome (particularly projected profits) in a fraction of the time it currently takes. This will enable a refinery to perform their planning more often and have them more up to date with regards to developments in the market and refinery itself.

The optimization process for creating the refinery planning contains a very large amount of inputs: variables such as the characteristics of the crudes, price information, and many parameters describing the setup of the refinery. The proposed method of approach to this problem will be to construct a metamodel – a model of the model. This practice is commonly applied in the field of simulation, when the true model takes a long time to run. Thus, instead a simplified model is created that is able to obtain a reasonably accurate outcome in a fraction of the time the true model would need. This model takes as its own inputs a set of variables ![]() and their known true model outcomes

and their known true model outcomes ![]() . This could either be historical data or certain points of data that are calculated exactly by the optimization process and consequently used for estimating the other points in the same time period. The use of historical data, however, does come with the condition that it is a valid representation of the refinery as it is in the present and future. The reason this is unsure is because most refineries are able to restructure their setup relatively fast, and could thus from one month to another completely shift their focus to producing more of another type of refinery product. This might invalidate relationships between input variables and output variables that we deduced from historical data.

. This could either be historical data or certain points of data that are calculated exactly by the optimization process and consequently used for estimating the other points in the same time period. The use of historical data, however, does come with the condition that it is a valid representation of the refinery as it is in the present and future. The reason this is unsure is because most refineries are able to restructure their setup relatively fast, and could thus from one month to another completely shift their focus to producing more of another type of refinery product. This might invalidate relationships between input variables and output variables that we deduced from historical data.

The result of the metamodel will be a model that is able to make an approximation of![]() in the form of

in the form of ![]() . There are multiple ways this can be achieved as there are a number of different metamodeling techniques, but the method that our main focus will be on is kriging. This is a technique that, based on data points that we have information about, will estimate other points of interest according to the following equation:

. There are multiple ways this can be achieved as there are a number of different metamodeling techniques, but the method that our main focus will be on is kriging. This is a technique that, based on data points that we have information about, will estimate other points of interest according to the following equation:

(1) ![]()

where ![]() denotes a known independent basis function, and

denotes a known independent basis function, and ![]() is an unknown parameter, the coefficient corresponding to the basis function.

is an unknown parameter, the coefficient corresponding to the basis function. ![]() is a Gaussian process defined by

is a Gaussian process defined by ![]() , with

, with ![]() being a stationary covariance function, also referred to as a covariance kernel. It is important to realize that where most conventional metamodels assume that

being a stationary covariance function, also referred to as a covariance kernel. It is important to realize that where most conventional metamodels assume that ![]() are independent and identically distributed (usually

are independent and identically distributed (usually ![]() ), kriging assumes the errors are not independent at all, but are a systematic function of

), kriging assumes the errors are not independent at all, but are a systematic function of ![]() . The basis functions

. The basis functions ![]() model the way the mean behaves; if for these basis function we take a zeroth order polynomial we reduce the kriging model to an ordinary kriging method, where the outcome is modelled as

model the way the mean behaves; if for these basis function we take a zeroth order polynomial we reduce the kriging model to an ordinary kriging method, where the outcome is modelled as ![]() , with

, with ![]() representing the constant mean among the

representing the constant mean among the ![]() data points considered. Otherwise, the model is generally named universal kriging, where the mean depends on the absolute location of the data points within the domain, meaning it can differ across regions of the region of interest.

data points considered. Otherwise, the model is generally named universal kriging, where the mean depends on the absolute location of the data points within the domain, meaning it can differ across regions of the region of interest.

The kernel on which the Gaussian process depends is something that measures similarity between observations ![]() , as is shown in Equation 2, where the kernel is split up between

, as is shown in Equation 2, where the kernel is split up between ![]() – the process variance – and

– the process variance – and ![]() – the correlation function between

– the correlation function between ![]() and

and ![]() .

.

(2) ![Rendered by QuickLaTeX.com \begin{align*} k(x,x') = & \sigma^2r(x,x') = \sigma^2r_{xx'} \\ & \forall x, x' \in B\ (= \prod_{j=1}^d[a_j, b_j]), \end{align*}](https://nekst-online.nl/wp-content/ql-cache/quicklatex.com-abbf958aa4e9c11cdf7681db6dec9965_l3.png)

where ![]() is the hypercube defined as

is the hypercube defined as ![]() , with

, with ![]() ,

, ![]() and

and ![]() for

for ![]() , indicating the domains of the component values of

, indicating the domains of the component values of ![]() .

.

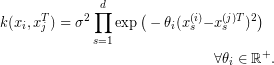

This implies that the Gaussian process will give deviations to points of interest that are very close to points we know their exact value of differently to those that are relatively far away. This can be seen from Equation 3, which shows the Gaussian exponential kernel, one that is often used in the kriging literature, both in simulation and in machine learning.

(3)

Estimation issues

Kriging models are able to produce quite accurate estimates of functional values, because they are able to incorporate highly nonlinear effects. There are, however, two substantial issues that need to be addressed before we can go about creating our metamodel. The first issue concerns the problem kriging experiences when the data is very high dimensional: as the dimensionality increases kriging becomes significantly slower in estimating a model, something which counteracts our objective of faster calculation of projected profits for all scenarios. Although we have a single output, the objective value of the optimization problem, the inputs we have can be as numerous as 50,000, as we have a very large set of crude oil characteristics and prices. Clearly, this will be problematic for the efficiency of the kriging model, as with such a vast number of correlations that need to be calculated the time to construct the model could well exceed the time that is used to calculate the profits through the regular optimization process. Thus, we need to decrease the number of inputs of the model while maintaining as much explanatory power as possible.

One way we could achieve this is by screening the data. The purpose of screening is the identification and retention of the input variables and interaction terms that are most important in explaining the outcome and the removal of those that are least important. In the context of metamodeling this is often done by use of experimental design (also called design of experiments), where a selection of the data ![]() are used to calculate their respective outcomes

are used to calculate their respective outcomes ![]() , with

, with ![]() , and subsequently used in sensitivity analysis so that their importance to the outcome can be analyzed. In order to identify many effects (individual and interaction effects of the variables), we need to select the vectors to observe in a way that incorporates as much variation as possible. [4] defines a method that creates supersaturated designs (

, and subsequently used in sensitivity analysis so that their importance to the outcome can be analyzed. In order to identify many effects (individual and interaction effects of the variables), we need to select the vectors to observe in a way that incorporates as much variation as possible. [4] defines a method that creates supersaturated designs (![]() ) by `near-orthogonality’. Loss of orthogonality is always the case in supersaturated designs as the number of sampled points is smaller than the number of dimensions in the data, and thus certain effects can be hard to distinguish. Thus, in order to come as close to orthogonality as possible, [4] defines the function

) by `near-orthogonality’. Loss of orthogonality is always the case in supersaturated designs as the number of sampled points is smaller than the number of dimensions in the data, and thus certain effects can be hard to distinguish. Thus, in order to come as close to orthogonality as possible, [4] defines the function ![]() , which measures the correlation between two columns

, which measures the correlation between two columns ![]() and

and ![]() of the design matrix. A value of

of the design matrix. A value of ![]() would mean the two columns are orthogonal. This method uses a design matrix which codes its variable values to plus or minus one, however, meaning that the variable either takes a high or low value, respectively. The downside of this is that the variables are set at either one of two possible values, and thus mainly focusses on linear effects, rather than being able to identify nonlinear effects. To identify those nonlinear effects we would need more points of data and the screening process would be more time consuming. However, in our situation the identification of nonlinear effects might be desirable, as generally the chemical processes that are modelled contain nonlinear effects. In [3] variables are screened in another way. They start out by grouping all variables into a single large group and assess whether or not the group has a joint significant effect on the outcome when their values are changed in tandem. If this is the case, the group is split up into two new groups and these groups are tested again. Unimportant groups are discarded, and the method continues until all important factors have been assessed individually and all unimportant factors have thus been discarded along the way. This method is called sequential bifurcation, and was first developed by [2].

would mean the two columns are orthogonal. This method uses a design matrix which codes its variable values to plus or minus one, however, meaning that the variable either takes a high or low value, respectively. The downside of this is that the variables are set at either one of two possible values, and thus mainly focusses on linear effects, rather than being able to identify nonlinear effects. To identify those nonlinear effects we would need more points of data and the screening process would be more time consuming. However, in our situation the identification of nonlinear effects might be desirable, as generally the chemical processes that are modelled contain nonlinear effects. In [3] variables are screened in another way. They start out by grouping all variables into a single large group and assess whether or not the group has a joint significant effect on the outcome when their values are changed in tandem. If this is the case, the group is split up into two new groups and these groups are tested again. Unimportant groups are discarded, and the method continues until all important factors have been assessed individually and all unimportant factors have thus been discarded along the way. This method is called sequential bifurcation, and was first developed by [2].

The second problem we have concerns which data points we should take; if we have plenty of historical data available (assuming that it is representative) we could quite easily use this to construct our model, but if we do not have this data available we are in a different situation. In that case we want to calculate the certain scenarios of the refinery planning by the optimization process, and use the results to construct our model. We do, however, require those sample points to be informative about the whole population, because if we only sample `light’ crude scenarios we will most likely incorrectly predict the profits of `heavy’ crude scenarios. The classic simulation problems will apply experimental design here as well, spreading out points over the domain (either at the edges or uniformly distributed), and this is something that would be applicable here too. Particularly the uniformly distributed data points are applicable, as those are more useful in estimating nonlinear effects. However, we could also apply clustering on the crude characteristics, categorizing the crudes in several groups, with crudes belonging to the same group having characteristics that are relatively similar. If we would then take samples from those groups we would be getting information that is representative for as many others as possible.

Conclusion

With these methods we will estimate the refinery planning scenarios faster than the optimization problem can calculate them, and provide the refineries with information that is more up to date than the scenarios they currently have. The resulting methodology is not only applicable to the refining business, but finds applications in many situations where high dimensionality poses an issue. The problem of data with very high dimensions is something that is experiencing a lot more research than in previous decades, as with the rise of big data it is becoming more relevant and “simple” increases in computing power no longer suffice to work around these problems. This research will implement several recent developments from this field in order to create a fast metamodel to aid refineries in keeping their data up to date.

References

[1] C.C. Aggarwal, A. Hinneburg, and D.A. Keim, On the Surprising Behavior of Distance Metrics in High Dimensional Space, 2001

[2] B.W.M. Bettonvil, 1990, Detection of important factors by sequential bifurcation, Universiteit van Tilburg, PhD Thesis

[3] J.P.C. Kleijnen, B. Bettonvil, and F. Persson, 2006, Screening for the important factors in large discrete-event simulation models: sequential bifurcation and its applications, Screening, Springer, 287-307

[4] D.K.J. Lin, Dennis, 1995, Generating systematic supersaturated designs, Technometrics, 37, 2, 213-225

Text by: Paul Visser